A New Frontier for Application Risk

AI adoption is happening everywhere. Some may say it’s overhyped, but one thing we can all agree on is that AI is unavoidable. Applications that once relied only on open-source packages now pull in pre-trained models and machine learning libraries, and they call external APIs powered by large language models (LLMs). Hugging Face, PyTorch, OpenAI, Gemini, Claude… the list keeps growing. These tools bring incredible capabilities, but they also introduce risks that often remain invisible.

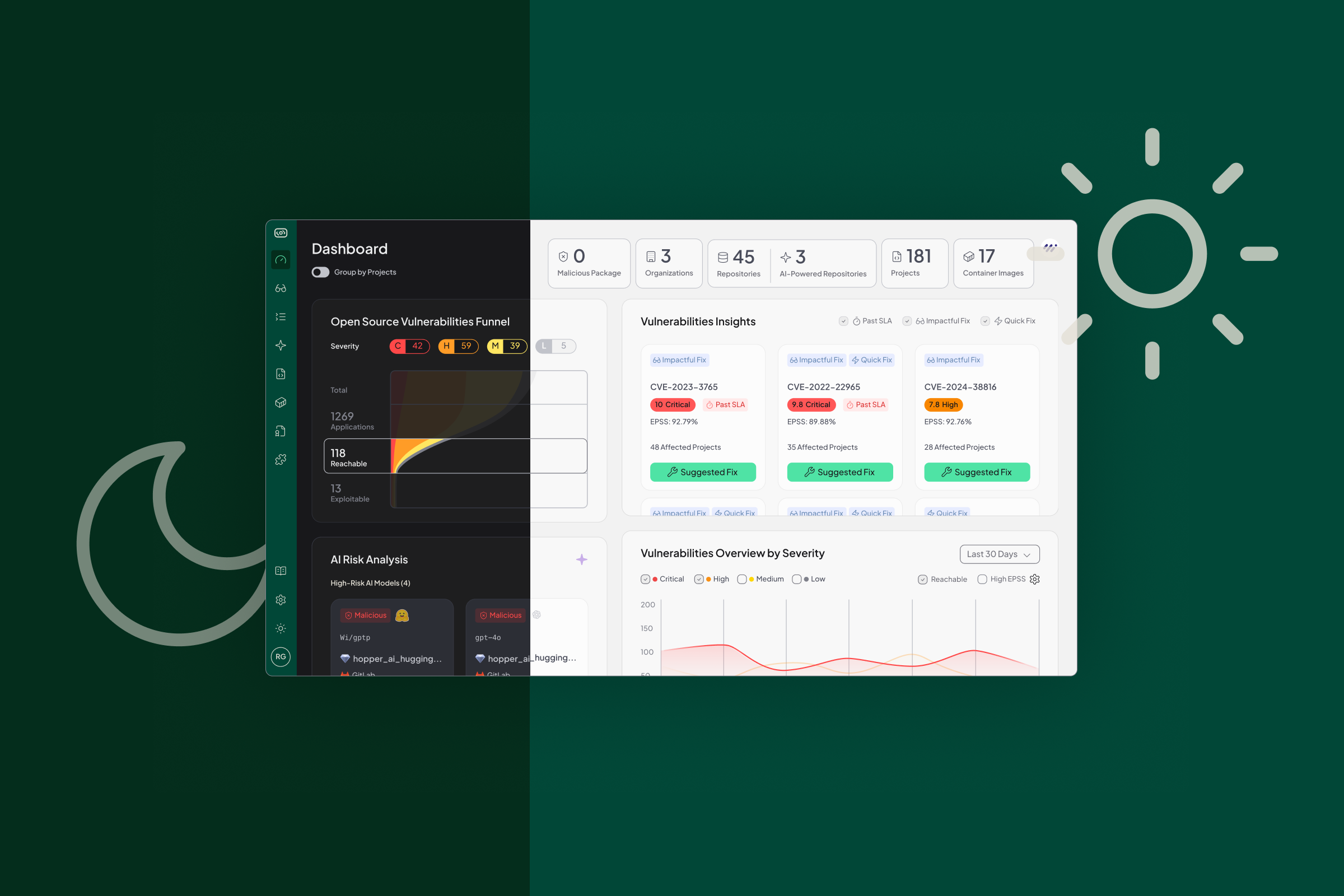

Many teams can’t answer basic questions. Which models are embedded in their code? How are those models being utilized? What data is flowing through them? Without clear answers, governance and security gaps start to grow. This is exactly what Hopper’s new AI-BOM and Risk Analysis features were built to address.

From Black Box to a Complete AI Bill of Materials

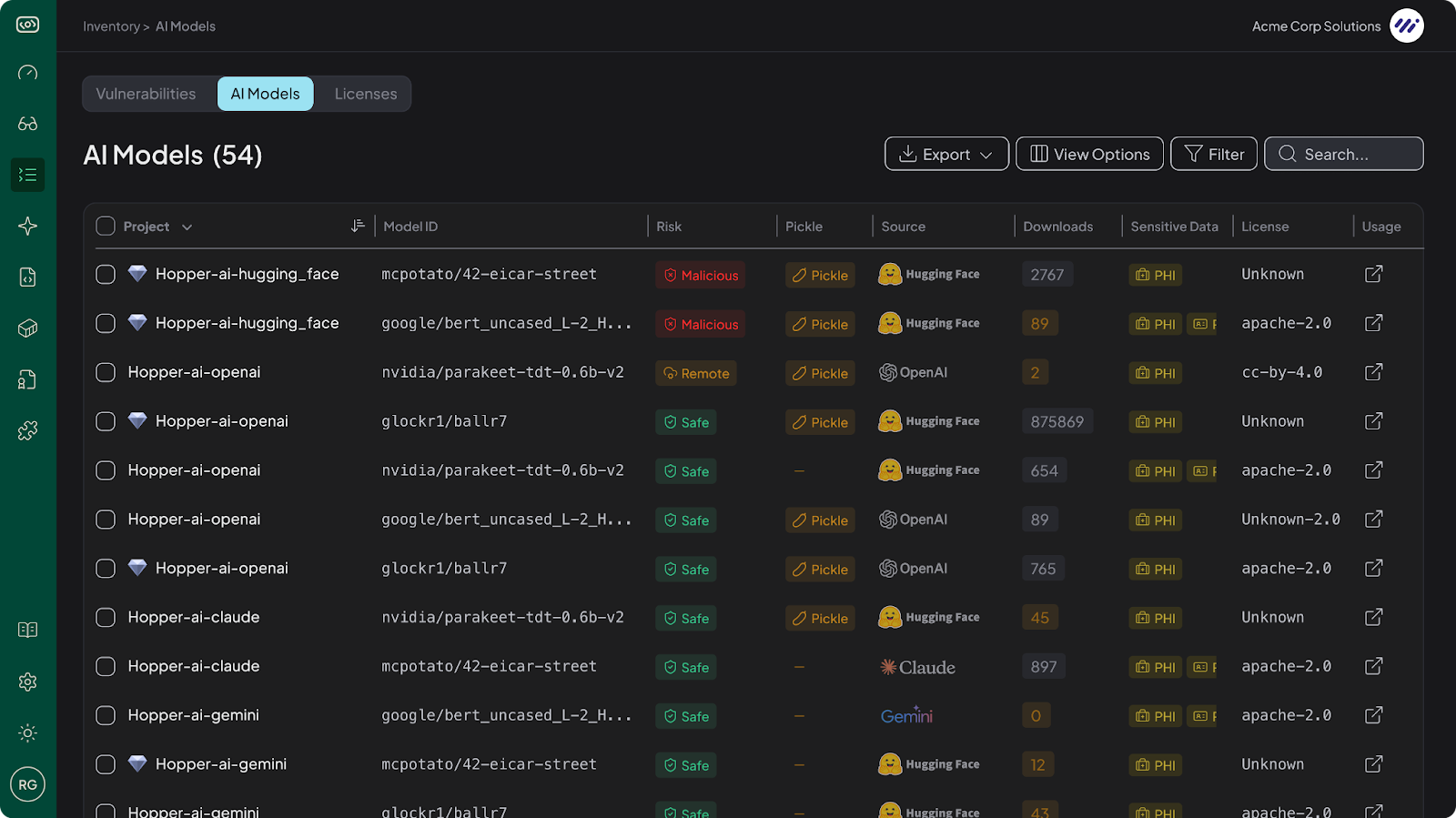

Hopper now automatically detects AI-related packages, embedded models, software development kits (SDKs), and external models accessed through API calls across your software stack, and maps where each one is used. The result is a complete AI Bill of Materials (AI-BOM) that gives teams the same clarity around models that they already expect for open-source libraries.

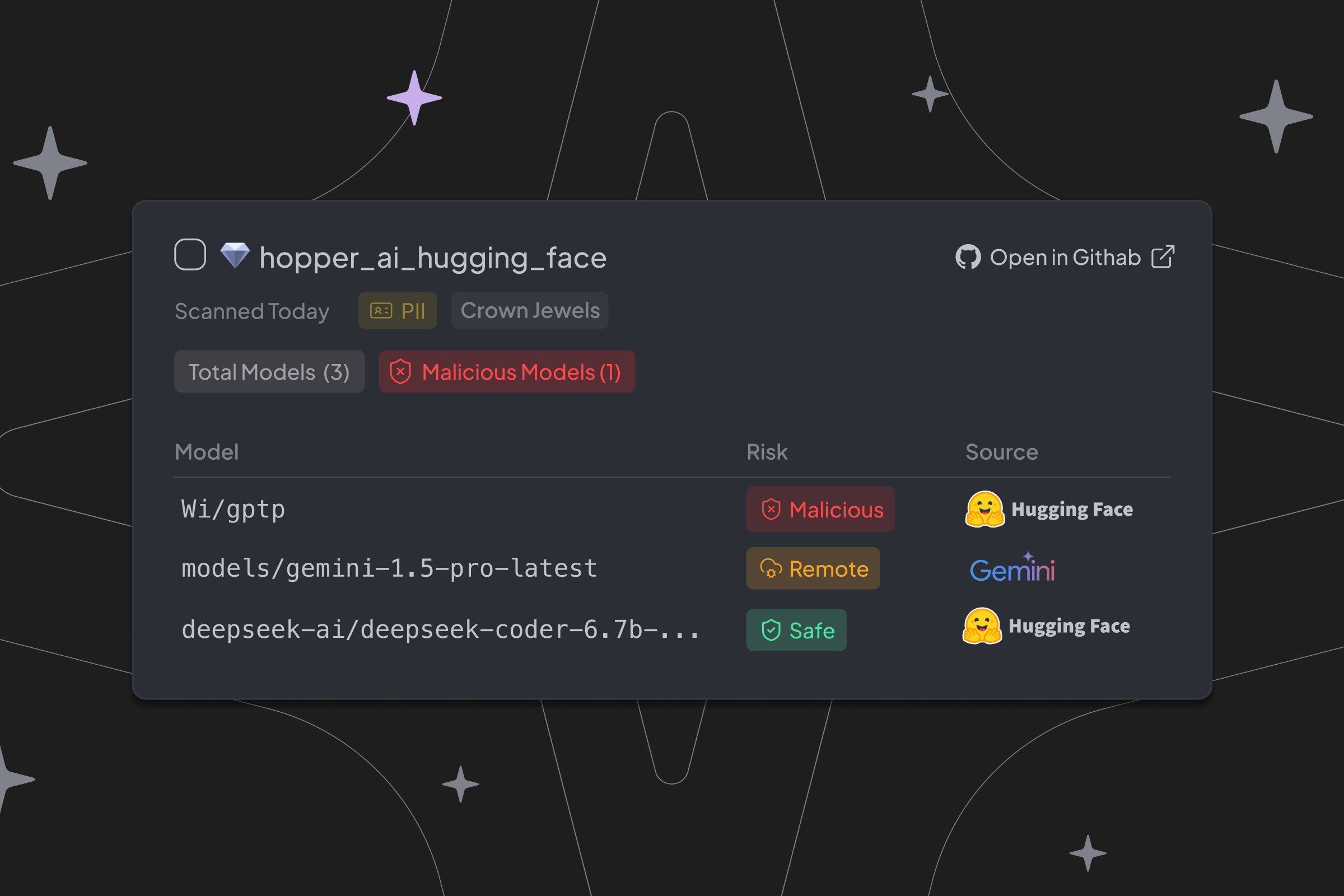

Hopper traces every identified model directly to the repository, file, and even line of code where it appears. Unsafe defaults, undocumented behaviors, and risky loading patterns such as pickle deserialization are exposed before they create problems. For external models, Hopper uncovers connections to services such as OpenAI, Claude, or Gemini and shows exactly how applications send data to them.
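File- and line-level mapping is easiest to picture with a small example. The Python sketch below is not Hopper’s implementation; it is a simplified, hypothetical static scan that uses the standard `ast` module to flag imports of AI SDKs and calls to pickle-based loaders, reporting each hit with its line number. The watch lists (`AI_PACKAGES`, `RISKY_CALLS`) are illustrative assumptions, and the sketch deliberately ignores import aliases such as `import pickle as pk`.

```python
import ast
from dataclasses import dataclass

# Hypothetical watch lists for illustration only; not Hopper's actual rules.
AI_PACKAGES = {"openai", "anthropic", "google.generativeai", "transformers", "torch"}
RISKY_CALLS = {"pickle.load", "pickle.loads", "torch.load"}


@dataclass
class Finding:
    file: str
    line: int
    kind: str
    detail: str


def is_ai_module(name: str) -> bool:
    """True if `name` is a watched package or one of its submodules."""
    return any(name == pkg or name.startswith(pkg + ".") for pkg in AI_PACKAGES)


def dotted_name(node: ast.AST) -> str:
    """Render a call target such as `pickle.load` as a dotted string ('' otherwise)."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        base = dotted_name(node.value)
        return f"{base}.{node.attr}" if base else ""
    return ""


def scan_source(source: str, filename: str) -> list[Finding]:
    """Statically scan one Python file and return findings sorted by line."""
    findings: list[Finding] = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if is_ai_module(alias.name):
                    findings.append(Finding(filename, node.lineno, "ai-import", alias.name))
        elif isinstance(node, ast.ImportFrom):
            # node.module is None for relative imports like `from . import x`.
            if node.module and is_ai_module(node.module):
                findings.append(Finding(filename, node.lineno, "ai-import", node.module))
        elif isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in RISKY_CALLS:
                findings.append(Finding(filename, node.lineno, "risky-call", name))
    # ast.walk is breadth-first, so restore source order before returning.
    return sorted(findings, key=lambda f: f.line)


sample = """\
import pickle
from openai import OpenAI

client = OpenAI()
with open("model.pkl", "rb") as f:
    model = pickle.load(f)
"""

for finding in scan_source(sample, "app/inference.py"):
    print(f"{finding.file}:{finding.line} [{finding.kind}] {finding.detail}")
```

Against the sample, it reports the `openai` import on line 2 and the `pickle.load` call on line 6, while the plain `import pickle` on line 1 is not flagged on its own. A production tool would also need to resolve aliases, read dependency manifests, and cover languages other than Python.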

⚠️ Pickle Warning: Loading a model serialized with Python’s pickle module can execute arbitrary code embedded in the file. This makes pickled models a high-risk vector for supply chain attacks and malicious payloads.
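To see why, here is a minimal Python sketch of the mechanism. The class name `MaliciousPayload` is hypothetical and stands in for a tampered model file. Its `__reduce__` method tells pickle which callable to invoke when the bytes are loaded; a real attacker would return something like `os.system`, while this example uses a harmless `print`.

```python
import pickle


class MaliciousPayload:
    """Hypothetical stand-in for a tampered model file (illustration only)."""

    def __reduce__(self):
        # pickle records this (callable, args) pair at dump time and
        # calls callable(*args) at load time. An attacker would put
        # os.system or similar here; we use a harmless print instead.
        return (print, ("arbitrary code ran during pickle.loads()",))


# "Publish" the tampered artifact.
blob = pickle.dumps(MaliciousPayload())

# "Load the model": deserializing alone triggers the embedded call.
result = pickle.loads(blob)
print(type(result))  # <class 'NoneType'>: print() ran instead of restoring an object
```

Running it prints the message before anything is returned, and the value handed back is `None` rather than a restored object. This is why formats such as safetensors, or `torch.load(..., weights_only=True)` in recent PyTorch releases, are generally preferred when loading weights from untrusted sources.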

It’s a level of transparency that hasn’t been available before. Now teams can see what models are actually in use, not just what they think is in use.

Why Blind Spots Around AI Hurt Everyone

AI is no longer a niche feature. It has become a core dependency in modern software. Yet traditional security scanning tools rarely account for how models are integrated or the risks they may carry. That creates blind spots for multiple stakeholders inside the business.

Security teams need to detect malicious or unsafe models before they make their way into production. Privacy leaders must ensure model usage doesn’t violate internal policies or external regulations. And engineering leaders need a clear view of how AI is spreading across their environments, including the measures needed to secure AI agents.

Without that visibility, organizations are left making decisions in the dark. Hopper changes that by providing context, evidence, and clear mapping for every model in use.

With Hopper, you get:

- A complete AI Bill of Materials (AI-BOM) across all applications

- Identification of unsafe, unsupported, or malicious models

- File- and line-level mapping of all detected models

- Detection of risky behaviors like insecure deserialization (e.g., pickle)

- Visibility into external API usage, including OpenAI, Claude, and Gemini

- Insights into which applications send sensitive data into AI services

- Context-rich evidence that developers and security teams can act on

Seamless AI Coverage in the Hopper Workflow

This new capability brings AI model and library detection into the same streamlined Hopper experience used for open-source risk and remediation. There is no new process to adopt, just expanded insight within the workflows teams already trust.

- No agents or runtime integrations required

- Automatic detection and continuous coverage

- Context-rich evidence developers trust

- Support for regulatory requirements tied to AI and data governance

Ready to Explore Your AI Exposure?

Whether your teams are actively building with AI or simply pulling in hidden dependencies, Hopper makes it possible to see what is really going on inside your applications. We don’t just tell you whether AI is being used; we tell you how and where it is used in your environment.

From hidden libraries to embedded models, from malicious models to unsafe serialization, Hopper delivers precise, actionable visibility with zero friction.