Today’s development stack looks nothing like it did a decade ago; modern software is built from open-source components and AI models. This shift has fundamentally changed how code is written, assembled, and secured. While it enables teams to build faster, it also introduces new forms of risk that traditional application security (AppSec) tools were never designed to handle.

At Hopper, we believe securing modern software requires a new foundation. Today, we are excited to announce four AI-powered AppSec products that bring clarity, intelligence, and speed to every stage of the software development lifecycle.

Why the Industry Needs a New Approach to AppSec

AI is changing both how software is written and what software is made of. Applications no longer just consume open-source libraries. They now embed AI models, call external model APIs, and rely on AI-generated code. These changes introduce entirely new categories of supply chain risk.

Security teams today are facing critical blind spots:

1. AI-Generated Code

AI coding assistants often suggest open-source packages without checking whether they exist, are secure, or are still maintained. Some suggested package names are hallucinated. If an attacker has registered a malicious package under that name or a close lookalike, developers can install it without realizing it. Other suggestions may contain known vulnerabilities or be outdated and unsupported. Hopper identifies these risks early and stops them before they reach your codebase.
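As a rough illustration of the lookalike problem, the Python sketch below compares a suggested package name against an allowlist of known packages using the standard library's `difflib`. The allowlist `KNOWN_PACKAGES`, the function `classify_suggestion`, and the 0.8 similarity cutoff are all hypothetical choices for illustration, not Hopper's implementation. A production check would also query the package registry for existence, maintenance status, and known vulnerabilities.

```python
import difflib

# Hypothetical allowlist of packages the team already trusts.
KNOWN_PACKAGES = {"requests", "numpy", "pandas", "transformers", "flask"}


def classify_suggestion(name: str, known: set[str] = KNOWN_PACKAGES) -> str:
    """Classify a package name suggested by an AI coding assistant."""
    # Loose normalization: case-insensitive, underscores treated as hyphens.
    normalized = name.strip().lower().replace("_", "-")
    if normalized in known:
        return "known"
    # A name that is *almost* a popular package is a classic typosquat pattern.
    close = difflib.get_close_matches(normalized, sorted(known), n=1, cutoff=0.8)
    if close:
        return f"lookalike:{close[0]}"
    # Not known and not similar to anything known: possibly hallucinated.
    return "unknown"
```

For example, `classify_suggestion("requets")` returns `"lookalike:requests"`, which would warrant a warning before anyone runs `pip install`. An `"unknown"` result is not proof of malice, only a signal that the name needs verification.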

2. Embedded AI Models

Libraries such as Hugging Face’s `transformers` make it easy to load and run AI models with just a few lines of code. These models can be introduced directly or bundled within third-party packages. They can carry unsafe defaults, risky loading mechanisms such as unguarded `pickle` deserialization (which can execute arbitrary code when a model file is loaded), or undocumented behavior. Hopper identifies embedded models, analyzes their risks, and traces them back to the exact repository, file, and line of code responsible for their inclusion.
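To make the `pickle` risk concrete: a pickle stream can name any importable callable (for example `os.system`) and invoke it during loading. The Python sketch below uses the standard library's `pickletools.genops` to list the globals a pickle would import without ever unpickling it, then checks them against a small denylist. The denylist and function names are hypothetical. The sketch also assumes a raw pickle stream: formats such as PyTorch checkpoints wrap the pickle inside a zip archive, and strings recalled from the memo via `BINGET` are not tracked here.

```python
import pickletools

# Hypothetical denylist; real scanners use larger lists or an allowlist instead.
UNSAFE_MODULES = {"os", "posix", "nt", "subprocess", "sys", "shutil", "builtins", "__builtin__"}


def imported_globals(data: bytes) -> list[tuple[str, str]]:
    """List (module, name) pairs a pickle would import, without unpickling it."""
    found = []
    strings = []  # string operands seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode "module name" as one space-separated argument.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Protocol 4+ pushes the module and name as strings, then STACK_GLOBAL.
            found.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


def is_unsafe(data: bytes) -> bool:
    """True if the pickle imports anything from a denylisted top-level module."""
    return any(module.split(".")[0] in UNSAFE_MODULES for module, _ in imported_globals(data))
```

Static inspection like this never executes the payload, which is the point. Serialization formats such as `safetensors`, which store only tensor data, avoid this class of issue altogether.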

3. External Model Access

Applications are increasingly connected to external AI services such as OpenAI’s GPT models, Anthropic’s Claude, and Google’s Gemini. These APIs often process sensitive data and play a role in core product functionality. However, their usage is rarely surfaced in traditional AppSec workflows. Hopper helps teams uncover and assess these external model interactions and their associated risks.
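One simple way to start surfacing external model usage is to scan source files for imports of model provider SDKs. The Python sketch below does this with the standard `ast` module. The `MODEL_SDKS` mapping is an assumption covering the module names used by the providers' Python SDKs (`openai`, `anthropic`, `google.generativeai`, `google.genai`). The sketch does not catch raw HTTP calls to model endpoints, and it is not how Hopper performs this analysis.

```python
import ast

# Assumed mapping of SDK module names to providers; extend for your stack.
MODEL_SDKS = {
    "openai": "OpenAI",
    "anthropic": "Anthropic (Claude)",
    "google.generativeai": "Google (Gemini)",
    "google.genai": "Google (Gemini)",
}


def find_model_sdk_imports(source: str, filename: str = "<memory>") -> list[tuple[str, int, str]]:
    """Return (filename, line, provider) for each import of a known model SDK."""
    hits = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            # Also consider "module.name" so `from google import genai` is caught.
            modules = [node.module] + [f"{node.module}.{a.name}" for a in node.names]
        else:
            continue
        # Collect providers in a set so one import statement is reported once.
        providers = {
            provider
            for module in modules
            for sdk, provider in MODEL_SDKS.items()
            if module == sdk or module.startswith(sdk + ".")
        }
        hits.extend((filename, node.lineno, p) for p in sorted(providers))
    return hits
```

Because each hit carries a file name and line number, results like these can be traced back to the exact place an external model enters the codebase.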

These challenges require full visibility. Security leaders need to understand which models and packages are being used, where they are integrated, and what risk they carry. This is the modern code-to-cloud problem for AI-powered applications. Hopper provides the context and analysis to solve it.

Introducing Hopper’s AI-Powered AppSec Product Suite

We are launching four new products that make open-source and AI model risk visible, actionable, and easy to remediate. Each one was designed to support both security and engineering workflows without adding complexity.

1. Coding Companion

AI coding assistants often suggest vulnerable, deprecated, or even hallucinated packages. Hopper flags these risks in real time before they ever reach your codebase. It helps developers write secure code from the start without disrupting their workflow.

2. Your AI AppSec Engineer

Security leaders are constantly fielding urgent questions:

- Am I impacted by this CVE?

- Which of my applications are the most exposed?

- Are we past SLA?

With Hopper, you don’t need to dig through dashboards or wait for reports. You just ask Grace, our AI AppSec Engineer.

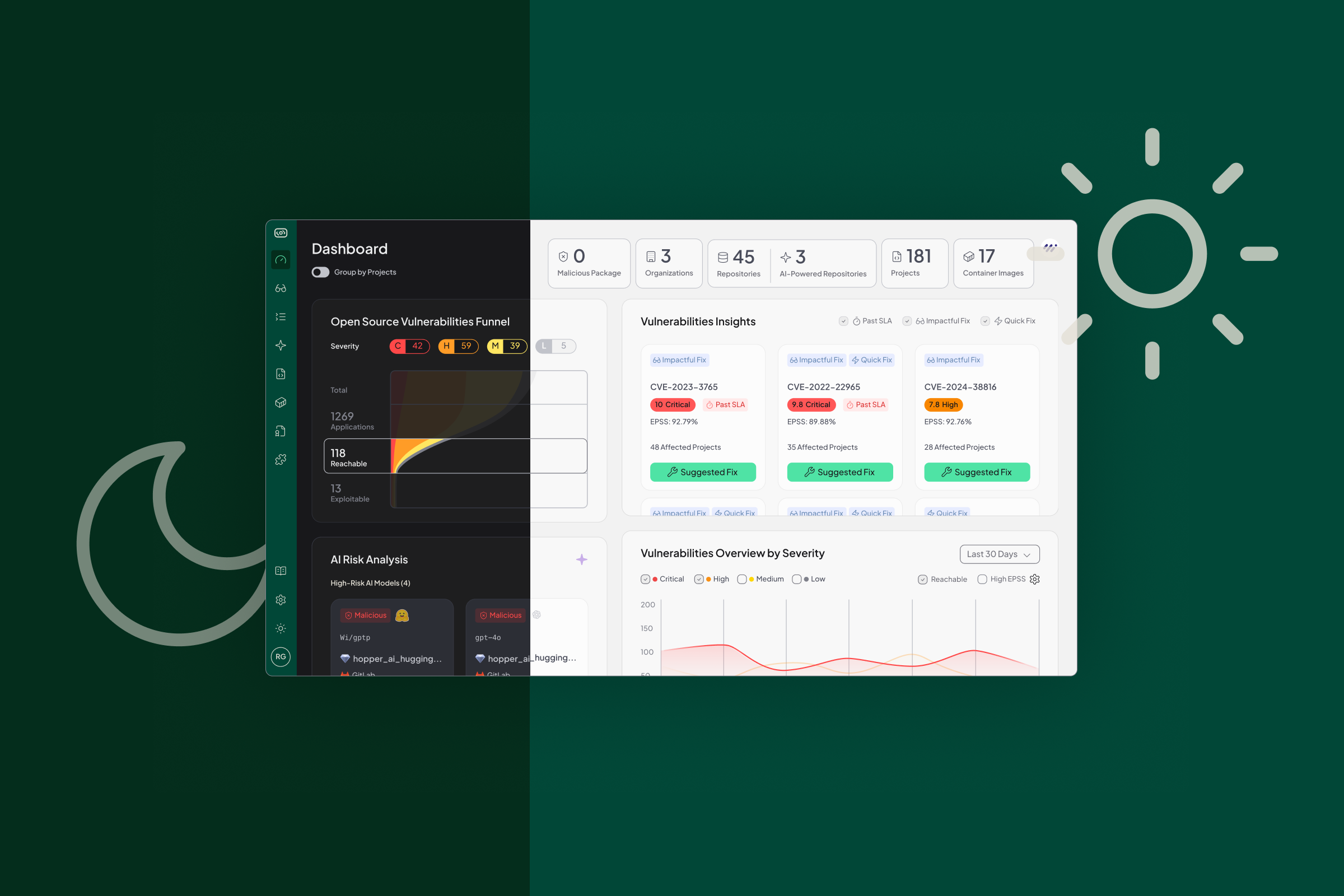

Ask Grace about CVE-2021-44228 (Log4Shell), and Hopper analyzes your environment, traces function-level reachability, and tells you whether your code can actually reach the vulnerable function. You get clear evidence, call graphs, and the full context immediately.
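Function-level reachability boils down to a graph question: is there a call path from your application's entry points to the vulnerable function? The Python sketch below answers it with breadth-first search over a call graph and returns the shortest call chain as evidence. The graph and function names are hypothetical and heavily simplified (the real Log4Shell code path passes through several internal Log4j classes). Building an accurate call graph across your code and its dependencies is the hard part and is not shown.

```python
from collections import deque
from typing import Optional

# Hypothetical, simplified call graph: caller -> list of callees.
GRAPH = {
    "app.handle_request": ["app.log_request"],
    "app.log_request": ["log4j.Logger.info"],
    "log4j.Logger.info": ["log4j.JndiLookup.lookup"],
    "app.batch_job": ["app.compute_totals"],
}


def call_path(graph: dict[str, list[str]], entry_points: list[str], target: str) -> Optional[list[str]]:
    """Return the shortest call chain from any entry point to target, or None if unreachable."""
    queue = deque([[entry] for entry in entry_points])  # each item is a path so far
    visited = set(entry_points)
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for callee in graph.get(path[-1], []):
            if callee not in visited:
                visited.add(callee)
                queue.append(path + [callee])
    return None
```

Here the request handler reaches `log4j.JndiLookup.lookup` through a three-call chain, while the batch job never does. Breadth-first search returns the shortest chain, which is usually the most readable evidence to show a developer.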

You can identify which internal packages carry the most risk, uncover vulnerabilities that are past their SLA, and surface the most impactful, low-effort fixes. Generate SBOMs, VEX files, and license reports with a single question. Understand where AI models are being used across your stack, and whether any of them introduce risk.

This is not just AI for security. This is how modern AppSec teams move faster, go deeper, and stay in control.

3. AI-BOM and Risk Analysis

Understanding where and how AI models are used across your stack is now critical. Hopper delivers a full AI Bill of Materials (AI-BOM), expanding your software supply chain visibility to include embedded and external models.

You see exactly which models are in use, where they are loaded, and how they were introduced. Hopper pinpoints the source across your repositories, files, and lines of code. It exposes risky behaviors such as insecure deserialization and flags unsafe, unsupported, or malicious models introduced through SDKs or open-source packages.

AI model usage is not just a security concern; it also introduces governance, privacy, and compliance challenges. Hopper helps you track which models process sensitive data such as personally identifiable information (PII), protected health information (PHI), or payment card data subject to PCI DSS, and verifies whether their use aligns with internal policies and regulatory requirements.

4. Remediation Assistant

Fixing vulnerabilities remains one of the most painful and time-consuming parts of AppSec. Backlogs pile up. SLAs get missed. Developers are left guessing which fixes matter most and what might break in the process.

The Hopper Remediation Assistant brings order and speed to the chaos. It delivers architecture-aware recommendations, maps potential breaking changes, and guides developers with the exact modifications needed to remediate. For every issue, Hopper surfaces the root cause, estimates the fix effort, and highlights the highest-impact, lowest-effort changes teams can make today.

By combining deep code understanding with function-level reachability, Hopper eliminates guesswork. Teams can resolve critical issues with confidence, meet remediation SLAs, and reduce mean time to remediate (MTTR) without slowing development down.

What’s Next

This launch marks a major step forward, but it is only the beginning.

We are building a new foundation for AppSec, one that speaks the language of modern software and meets teams where they are. With Hopper, we are bringing AI-native security to every corner of the software lifecycle, making it faster and easier for teams to ship secure code with confidence.

In the coming weeks, we will share more about each of these products, including real-world examples of how customers are using them to solve some of the hardest challenges in AppSec today.

If you are navigating open-source risk, embedding AI into your products, or simply looking for a better way to secure what you build, we believe Hopper can change the way you work.